Advai Platform

Your solution for testing, monitoring, and trusting AI

Our platform supports every stage of AI adoption: selecting the right model, proving it is ready to go live, and keeping it safe, secure, and reliable in production.

Testing

Test for production readiness, not just performance.

Benchmark models and configurations quickly, against real constraints

Red teaming and adversarial testing you can trust, grounded in a repeatable methodology

Tests shaped to your use case, not generic leaderboards

Go-live thresholds and evidence packs, supported by expert guidance

Model Arena

Model Arena speeds up and strengthens how you choose AI models, vendors, and configurations. Dynamic benchmarking measures performance, safety, and security under your policy, latency, and cost constraints. The result is clear selection evidence you can use for approvals and procurement, without relying on marketing claims or pitch decks.

Insights

Test Plans align to your governance, risk, and compliance requirements. You get traceable results, versioned thresholds, and audit-ready records to support sign-off.

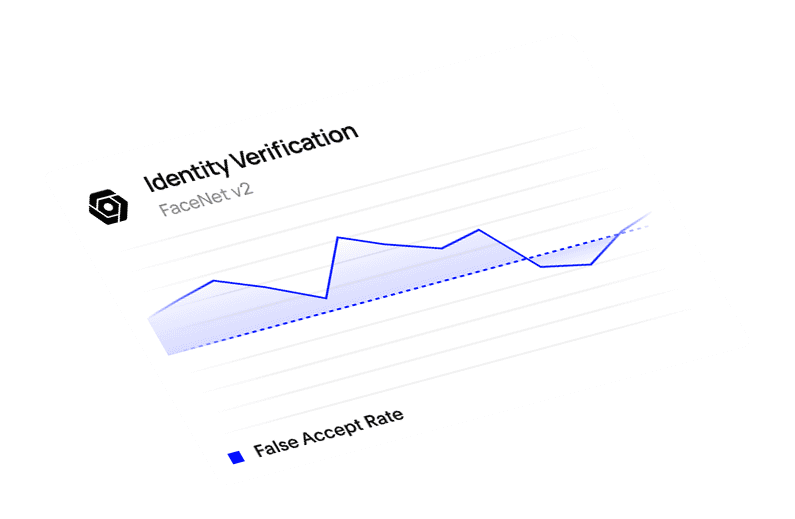

Monitoring

Keep your AI safe, secure, and reliable after launch.

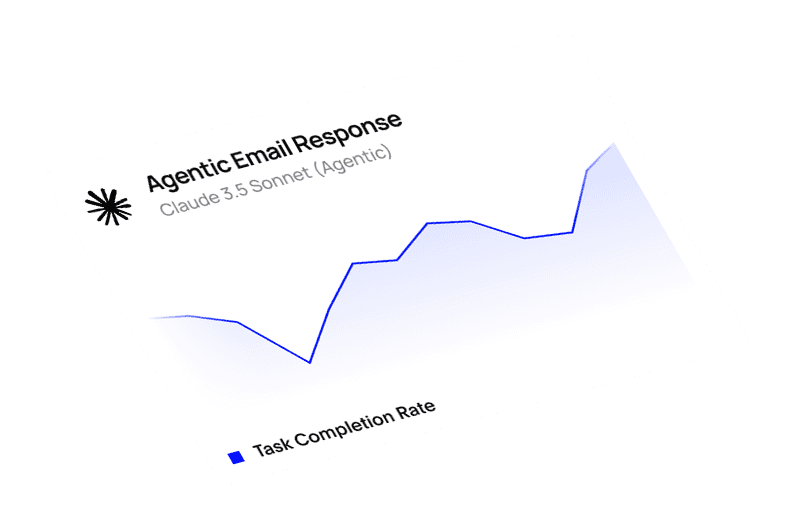

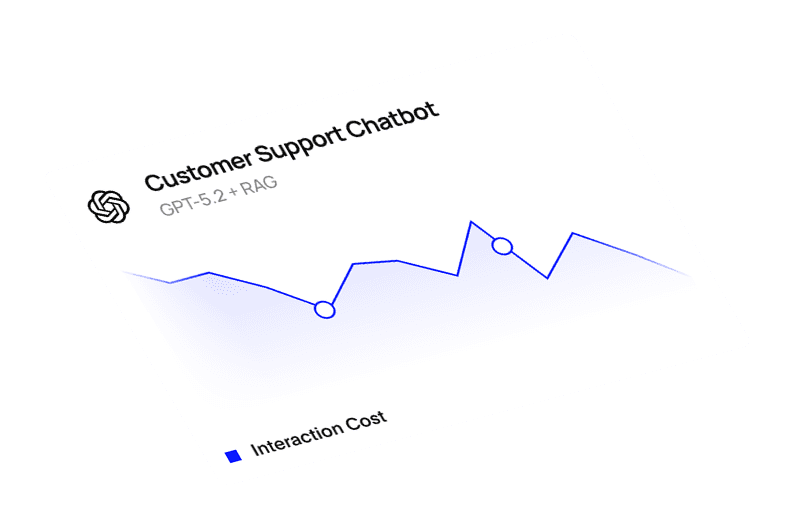

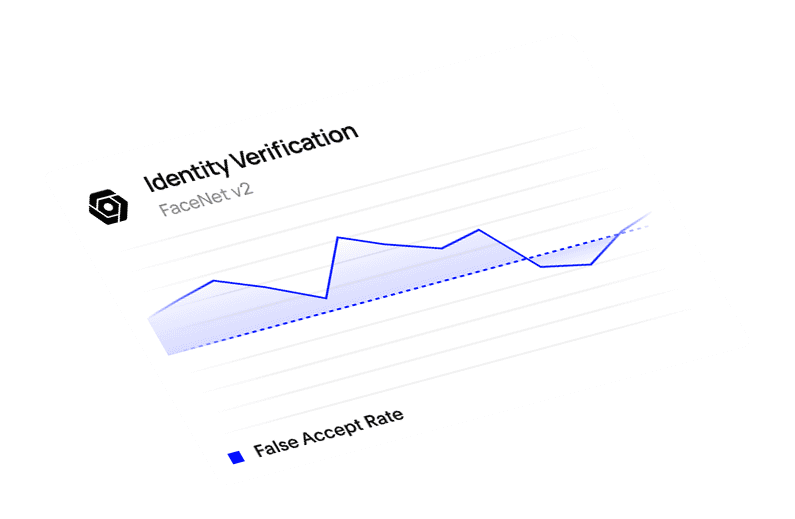

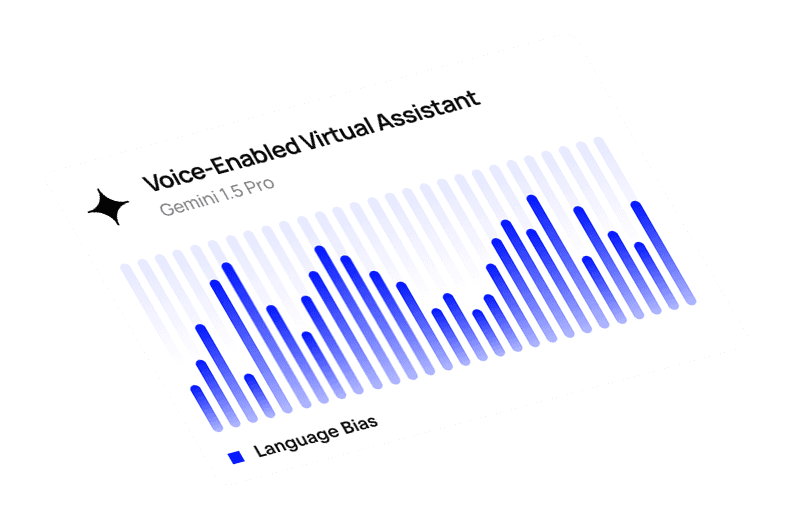

Real-world behaviour tracked continuously

Early warning on drift, degradation, and emerging failure modes

Alerts for policy breaches, security threats, and data leakage risks

Cost and tool-use signals that prevent surprises

Monitor

Monitor multiple AI systems comprehensively by assessing their logs for key performance, risk, and security indicators.

Onboarding Your AI

Managed setup to assess use-case risks, define thresholds, and configure your initial tests and monitoring.

Features

Coverage across AI system types, from agents to multimodal systems. Whatever you are deploying, Advai lets you Choose, Test, and Monitor systems of that type.

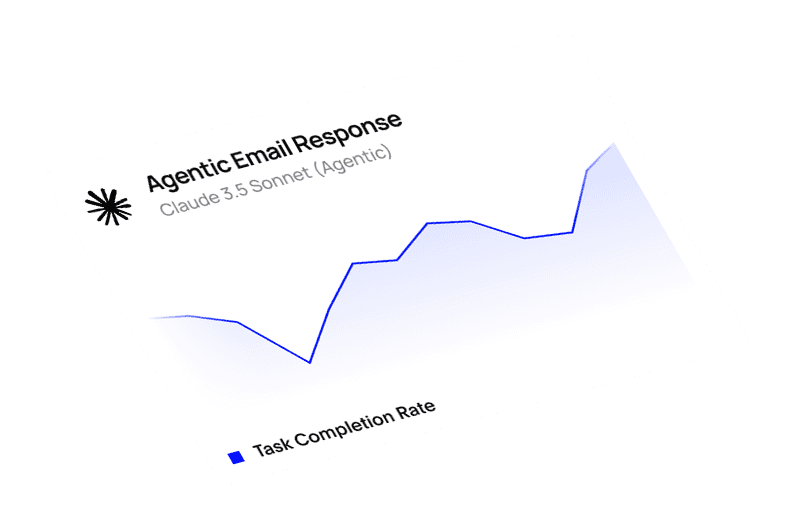

Agents

Autonomous systems that use tools and take actions. Test multi-step behaviour, tool misuse, and unsafe execution paths, then monitor real-world tool use and escalation signals.

Large Language Models

Compare model options for your task, test safety and security boundaries, and monitor policy adherence and drift in production.

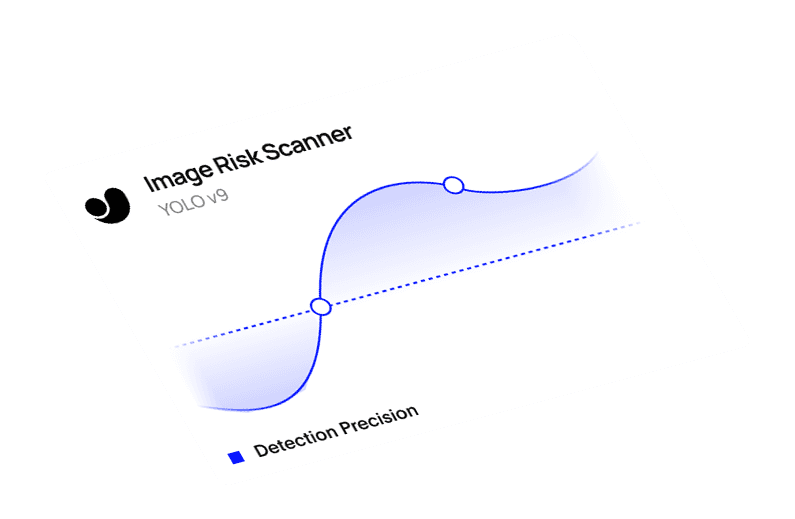

Computer Vision

AI that interprets images and video in high-stakes workflows. Choose based on measurable performance, test robustness and edge cases, then monitor for degradation as conditions change.

Time Series

Forecasting and detection over dynamic data. Choose models under latency and accuracy constraints, test reliability under shift and seasonality, then monitor drift and regressions over time.

Multimodal Systems

Text, image, audio, and video combined. More capability brings more complexity, so Choose and Test need broader coverage, and Monitor needs richer signals.

Security and quality

Certified to ISO 27001 and Cyber Essentials Plus

Your questions, answered

Do you provide the model?

What do we get from Advai?

Where do results and alerts show up?

Can you help if we do not have in-house expertise to run testing?

How is this different from static benchmarks or generic eval tools?