WHY MLOPS IS NOT ENOUGH

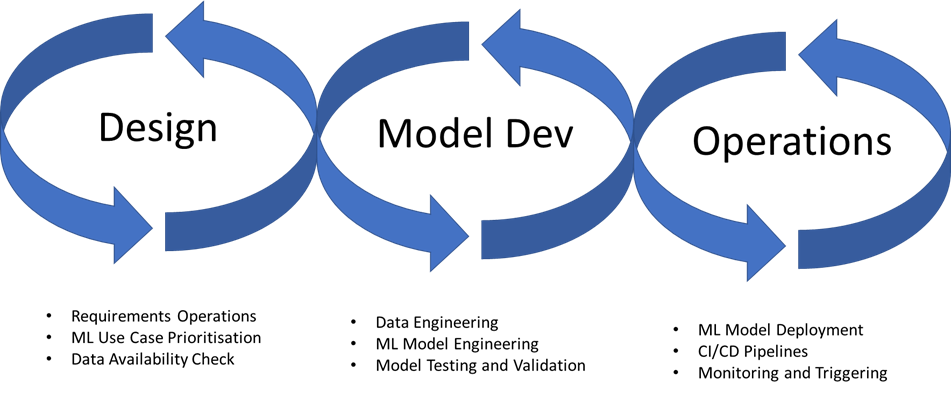

MLOps is a welcome addition, but its scope is ultimately limited: it still focuses on a traditional approach to developing systems rather than on the systems and datasets themselves.

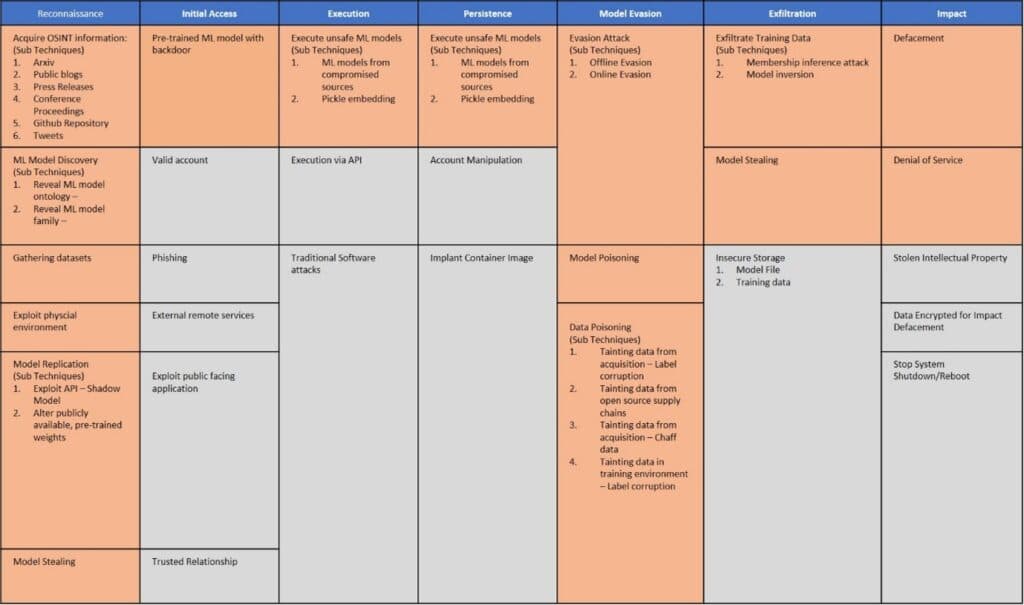

AI and ML applications, depending on how they are implemented, use a combination of complex algorithms, optimisation functions, multi-dimensional vectors, reinforcement learning, or hybrid methodologies. These complex systems are susceptible to adversarial AI/ML attacks: imperceptible manipulations of inputs or training data that change the system's decisions or confuse it entirely.
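To make "imperceptible manipulation of inputs" concrete, here is a minimal sketch using a toy linear classifier rather than a real AI/ML system. It applies a fast-gradient-sign style perturbation. For a linear score the gradient with respect to the input is just the weight vector, so every feature is nudged by the same tiny amount in the direction that pushes the score across the decision boundary. The model, its 1,000 random features and the `fgsm_linear` helper are all hypothetical and exist only for illustration.

```python
import random


def score(w, b, x):
    """Linear decision score: > 0 means class 1, otherwise class 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_linear(w, b, x, margin=1.01):
    """Hypothetical fast-gradient-sign style attack on a linear model.

    The gradient of a linear score with respect to x is w, so each feature is
    shifted by epsilon in the direction sign(w_i) that moves the score towards
    the opposite class. epsilon is the smallest budget that reaches the
    boundary, multiplied by a small safety margin so the decision flips.
    """
    s = score(w, b, x)
    epsilon = margin * abs(s) / sum(abs(wi) for wi in w)
    direction = -sign(s)  # push the score towards the other class
    x_adv = [xi + direction * epsilon * sign(wi) for xi, wi in zip(x, w)]
    return x_adv, epsilon


random.seed(0)
n_features = 1000  # hypothetical: e.g. pixel intensities scaled to [0, 1]
w = [random.uniform(-1, 1) for _ in range(n_features)]
b = 0.0
x = [random.uniform(0, 1) for _ in range(n_features)]

x_adv, epsilon = fgsm_linear(w, b, x)
max_change = max(abs(a - o) for a, o in zip(x_adv, x))
print("original prediction:   ", predict(w, b, x))
print("adversarial prediction:", predict(w, b, x_adv))
print(f"largest per-feature change: {max_change:.4f}")
```

Because the tiny per-feature changes all push in the same direction, their effect on the score adds up across many features. The decision therefore flips even though no single feature moves by more than a few hundredths. The sketch does not clip the perturbed values back into the valid input range, as a practical attack would.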

What's more, models can be stolen. By querying a model and observing its responses, an attacker can recreate its logic or use those responses to reconstruct the sensitive training data that was used to create it. None of these issues is currently addressed by MLOps.
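The "recreate their logic" part of model theft can be illustrated with a minimal equation-solving sketch. It assumes a hypothetical prediction API that wraps a private linear model and returns raw confidence scores. Inverting the sigmoid turns each confidence back into the model's linear score. One query on the all-zero input then reveals the bias, and one query per unit vector reveals each weight. `BlackBoxModel` and `extract_linear_model` are invented for illustration. Real models are non-linear and usually need many more queries and an approximately trained surrogate. The sketch does not show training-data reconstruction.

```python
import math
import random


class BlackBoxModel:
    """Hypothetical prediction API: callers only ever see a confidence score."""

    def __init__(self, n_features, seed=42):
        rng = random.Random(seed)
        self._w = [rng.uniform(-2, 2) for _ in range(n_features)]  # private weights
        self._b = rng.uniform(-1, 1)  # private bias

    def predict_proba(self, x):
        z = sum(wi * xi for wi, xi in zip(self._w, x)) + self._b
        return 1.0 / (1.0 + math.exp(-z))


def logit(p):
    """Inverse of the sigmoid: recovers the linear score from a confidence."""
    return math.log(p / (1.0 - p))


def extract_linear_model(api, n_features):
    """Equation-solving extraction using n_features + 1 queries.

    The all-zero input reveals b; each unit vector e_i reveals w_i + b.
    """
    b = logit(api.predict_proba([0.0] * n_features))
    w = []
    for i in range(n_features):
        e_i = [0.0] * n_features
        e_i[i] = 1.0
        w.append(logit(api.predict_proba(e_i)) - b)
    return w, b


n = 20
api = BlackBoxModel(n)
stolen_w, stolen_b = extract_linear_model(api, n)

# The stolen surrogate agrees with the private model on a fresh input.
rng = random.Random(7)
probe = [rng.uniform(-1, 1) for _ in range(n)]
surrogate_z = sum(wi * xi for wi, xi in zip(stolen_w, probe)) + stolen_b
surrogate_p = 1.0 / (1.0 + math.exp(-surrogate_z))
print(f"API confidence:       {api.predict_proba(probe):.6f}")
print(f"surrogate confidence: {surrogate_p:.6f}")
```

Exposing full confidence scores is what makes this toy attack exact. Returning only class labels, or rounding scores, makes extraction harder, but it does not rule it out.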

Defending Against Adversarial Attacks

Current MLOps processes focus on optimising AI/ML models by reviewing them against:

- Accuracy, Precision and Robustness

- Bias Identification

- Model Drift

- Explainability

If applied regularly, these techniques can help maintain a robust model, but they do not resolve the underlying issues that leave models vulnerable to model theft, data theft, denial of service, fraud, or deception. That's where you will probably want help.

If you are interested in talking to us about the work that we’re doing to protect AI/ML systems and whether we can help you, get in touch through our website or by emailing us directly at contact@advai.co.uk.